A Video Workflow for the RED

October 21, 2012

Despite rather infrequent posting, I still really enjoy writing this blog. I do it purely for my own desire to arrive at a more in-depth understanding of the problems we encounter in the field. Lately I've had very little time for this project, combined with an ever-expanding list of topics I'd like to cover. Moving into the new year, I'd like to open this site up to other writers who have ideas for pertinent, appropriate content. Interested? Let me know.

My first guest writer is Tom Wong, a Local 600 DIT working here on the east coast. He recently engineered a short film I produced with Kea Alcock and Zina Brown, on which he used a newly available on-set workflow for the EPIC camera that I'm excited about. My preferred way of working as an on-set colorist relies heavily on the immediacy of video, where everyone on the set sees an image that will be reflected in dailies. Because of the post-centric workflow of the RED, this could not easily be achieved until now. By combining two pieces of software, LiveGrade and Scratch Lab, it's finally feasible to work in a more traditional, "video style" color correction workflow with the EPIC. For those who like to work this way, this is great. The advantage is that the Director of Photography can now make color decisions with the DIT while lighting and composing shots, instead of taking the time, usually at the end of the day, to set the look for dailies before the files are processed.

Thanks to director Zina Brown for letting me share images from his new film, Dreams of the Last Butterflies.

RED WORKFLOW

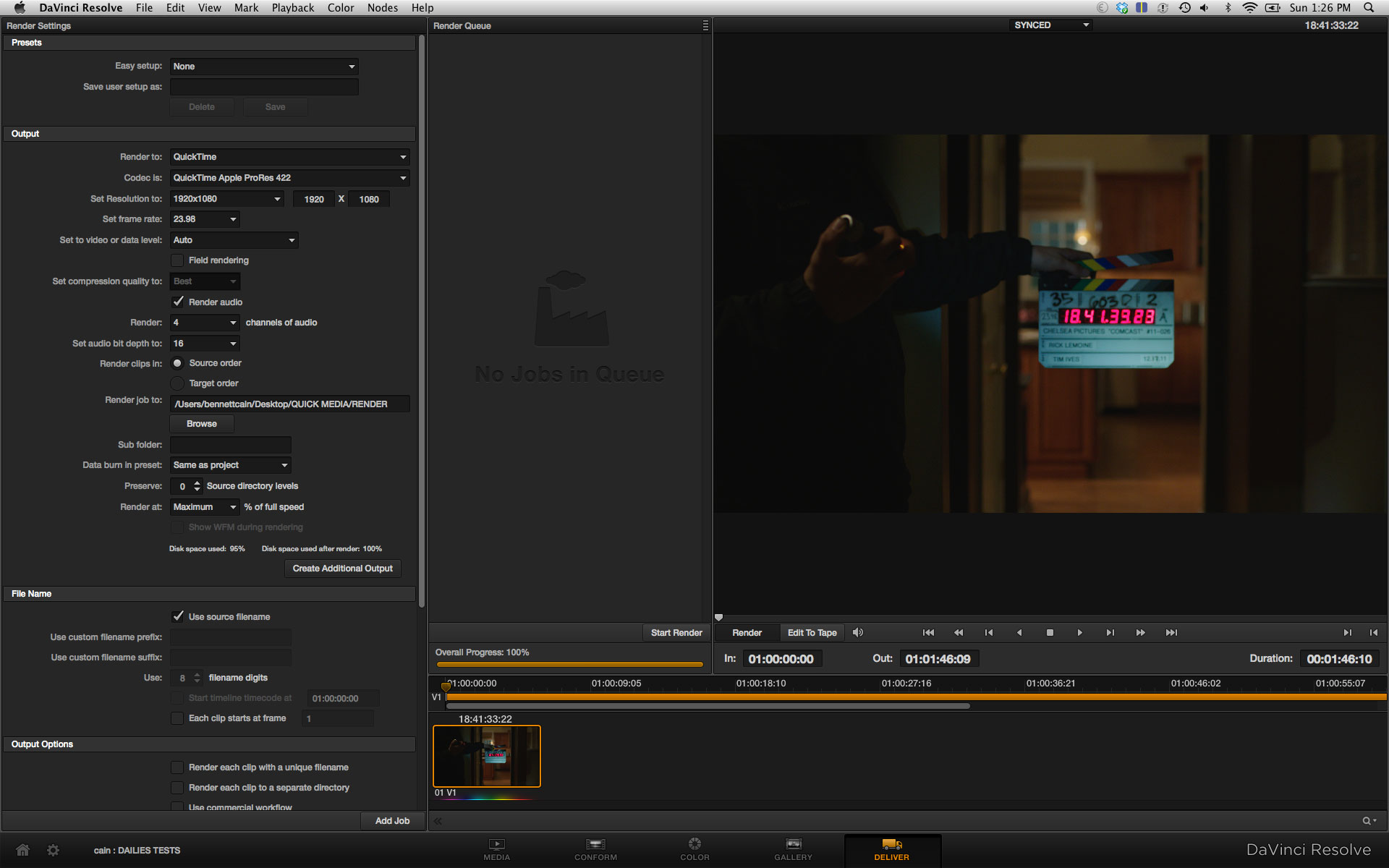

When doing looks with r3d files, we light the scene, ideally with the subjects in place, shoot a test clip, bring it to the computer and then apply color work to it. You can judge exposure from there, or use the built-in camera tools, then apply your look, get it approved, and start applying it to the rest of the clips, matching from there. You can build your looks in Redcine X Pro or in third-party software like Scratch Lab, DaVinci Resolve, Colorfront OSD/EXD, etc. Generally, you have access to metadata controls for the RAW to set a baseline for your look before you apply RGB alterations to it. This can produce cleaner, better results when working with r3d because you aren't jumping straight into forcing the RGB values.

LIVE GRADING WORKFLOW

With a camera like the Alexa, or anything similar where you are dealing with a "baked" RGB acquisition format in Log, you output a log signal from the camera, monitor it, and paint that image using software/hardware. So the signal goes in Log from the camera to your cart, through the LUT, and then back out from your cart to whoever needs it. You save your looks as a CDL or a 3D LUT, and this color correction data is then passed down the chain for use in dailies, etc.
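To make the CDL part of that concrete: an ASC CDL is just ten numbers, a per-channel slope, offset and power plus one saturation value, which is why it travels so easily down the pipeline. Below is a minimal sketch, assuming the published ASC CDL math (out = clamp(in × slope + offset) ^ power per channel, then saturation blended around Rec. 709 luma). The class and method names are hypothetical and this is not how LiveGrade or any other grading tool implements it; the XML writer only emits a bare `ColorCorrection` element and omits the namespace and optional elements a real .cc file may carry.

```python
from dataclasses import dataclass
from typing import Tuple
import xml.etree.ElementTree as ET

RGB = Tuple[float, float, float]

# Rec. 709 luma weights used by the ASC CDL saturation operation
LUMA = (0.2126, 0.7152, 0.0722)


def _clamp(x: float) -> float:
    """Clamp a value to the 0..1 range."""
    return min(max(x, 0.0), 1.0)


@dataclass
class CDL:
    """Hypothetical container for one ASC CDL look (slope/offset/power + saturation)."""
    slope: RGB = (1.0, 1.0, 1.0)
    offset: RGB = (0.0, 0.0, 0.0)
    power: RGB = (1.0, 1.0, 1.0)
    saturation: float = 1.0

    def apply(self, rgb: RGB) -> RGB:
        # SOP step, per channel: clamp(in * slope + offset) ** power
        sop = tuple(
            _clamp(c * s + o) ** p
            for c, s, o, p in zip(rgb, self.slope, self.offset, self.power)
        )
        # Saturation step: push each channel toward or away from Rec. 709 luma
        luma = sum(w * c for w, c in zip(LUMA, sop))
        return tuple(_clamp(luma + self.saturation * (c - luma)) for c in sop)

    def to_xml(self, cc_id: str) -> str:
        # Minimal ColorCorrection element; namespace and optional nodes omitted
        cc = ET.Element("ColorCorrection", id=cc_id)
        sop = ET.SubElement(cc, "SOPNode")
        for tag, vals in (("Slope", self.slope), ("Offset", self.offset), ("Power", self.power)):
            ET.SubElement(sop, tag).text = " ".join(f"{v:.6f}" for v in vals)
        sat = ET.SubElement(cc, "SatNode")
        ET.SubElement(sat, "Saturation").text = f"{self.saturation:.6f}"
        return ET.tostring(cc, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical look: slightly warm, slightly desaturated
    look = CDL(slope=(1.1, 1.0, 0.95), offset=(0.0, 0.0, 0.02), saturation=0.9)
    print(look.apply((0.5, 0.5, 0.5)))
    print(look.to_xml("shot_010"))
```

Because the whole look is those few numbers rather than a baked image, the same values can be re-applied in dailies and in the finish, which is the point of passing CDL data along the chain.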

This method is one of the most common ways of working. It’s not limited to internal ProRes recording either, it’s also commonly used with ARRIRAW.

So when working with the traditional RED workflow, I like to call that an “untethered” workflow. You aren’t paintboxing the image on a live signal. You shoot something, bring it to the computer, and then process it there. If you are using Redcine X, all that information is stored in the metadata, and most finishing software can simply open the r3d with the RMD (metadata) information right there, automatically putting the finishing colorist in a good spot to start. For other cameras this can be a little more difficult, since RED designed their workflow from the ground up to work this way. Passing color decisions down the whole pipeline untethered can be done, but it’s not quite as streamlined as with r3d.

HYBRID VIDEO AND R3D WORKFLOW: WHY AND HOW

Having done so many jobs the tethered way, my goal this past year was to figure out how to accomplish the same thing when working with the EPIC. How can we start merging the workflows at the beginning of the chain?

WHY

Why fix it when it’s not broken, you may ask? Well, to me it’s always been a little broken. Honestly, and I’m sure many will agree with me, it’s tough to get people to run over to your cart to sign off on looks. DPs are busy on set, and so are directors. It also diminishes client confidence when they look at a monitor, don’t like what they see, and you have to get them to run over to the cart to show them what it will REALLY look like in dailies and as the starting point for the finish. Being tethered, that is, color correcting a live video signal, keeps everybody on the same page, has everybody looking at approximately the same image, and lets you make adjustments immediately. That means affecting lighting decisions right away, compensating when exposure changes, etc. It’s instant, and I really believe you gain a lot more with this method.

Yes, you can load looks directly into the RED cameras, but you have to do it every time you make any change to the LUT. This is impractical in practice.

Working on a live signal is just faster and problems that can be solved with lighting are easier to identify.

HOW

Back when Pomfort was beta testing LiveGrade, now a very popular piece of software for on-set live signal color correction, I was going through it and listing the things I personally wanted from the software. One of my suggestions was to come up with a Redgamma delog LUT so that I could apply the same workflow I’d been using in a “video” tethered setup, bypassing the Redcine X method altogether. Originally I said that if we could save RMDs out of LiveGrade, that would be stellar. Unfortunately, that’s an all-RED format, and no one has an SDK from RED to create these files outside of RED software. But Pomfort was still paying attention, and in the latest update they added new preset modes to the delog menu. I saw the variety of cameras in there: Alexa C-Log, Canon Log, S-Log, S-Log2, and then... Redgamma2, Redgamma3.

© 2021 Bennett Cain / All Rights Reserved /
