This is a great little device that's chronically underutilized, as it gives you full remote access to the camera, unlike the web browser Ethernet interface, which is very limited. The RCU-4 is powered through its control cable, which I've used successfully at lengths up to 150'. This device makes white balancing the Alexa incredibly fast and efficient, since it no longer needs to be done at the side of the camera.

Not to get too obvious with this... Moving on.

Another approach is to manage color temperature by putting color correction gel (CTB, CTO, CTS, Plus Green, Minus Green) on light sources, converting those with undesirable color temperatures so they produce the correct, color-accurate response. Color correction tools, digital or practical, do not necessarily apply to the creative use of color temperature. Having mixed color temperatures in the scene is an artistic decision, and one that can have a very desirable effect, as it builds color contrast and separation into the image. Mixed color temperatures in the scene will result in an ambient color temperature lying somewhere between the coolest and warmest sources. Typically in these scenarios, a "Reference White," or chroma-free white, can be found by setting the camera white balance somewhere around this ambient color temperature.
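Gels for color temperature are usually reasoned about in mireds (one million divided by the kelvin value), because a given gel produces a roughly constant mired shift regardless of the source it's on. As a minimal sketch under that model, the Python below computes the shift needed to move one source to another, plus an unweighted mired-space midpoint between two sources. The helper names are hypothetical, and the midpoint is only a rough starting point for a white balance under mixed light: it ignores how much each source actually contributes to the scene.

```python
def mired(kelvin: float) -> float:
    """Convert a correlated color temperature in kelvin to mireds."""
    if kelvin <= 0:
        raise ValueError("color temperature must be positive")
    return 1_000_000 / kelvin


def gel_shift(source_k: float, target_k: float) -> float:
    """Mired shift needed to move a source at source_k to target_k.

    Negative values call for a blue (CTB-style) correction,
    positive values for an orange (CTO-style) correction.
    """
    return mired(target_k) - mired(source_k)


def mired_midpoint(cool_k: float, warm_k: float) -> float:
    """Hypothetical, unweighted midpoint of two sources, averaged in mired space."""
    mid = (mired(cool_k) + mired(warm_k)) / 2
    return 1_000_000 / mid


if __name__ == "__main__":
    # Tungsten (3200K) toward daylight (5600K): a negative, i.e. blue, shift.
    print(round(gel_shift(3200, 5600), 1))   # -133.9
    # Rough ambient estimate for a scene lit by both sources.
    print(round(mired_midpoint(5600, 3200)))  # 4073
```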

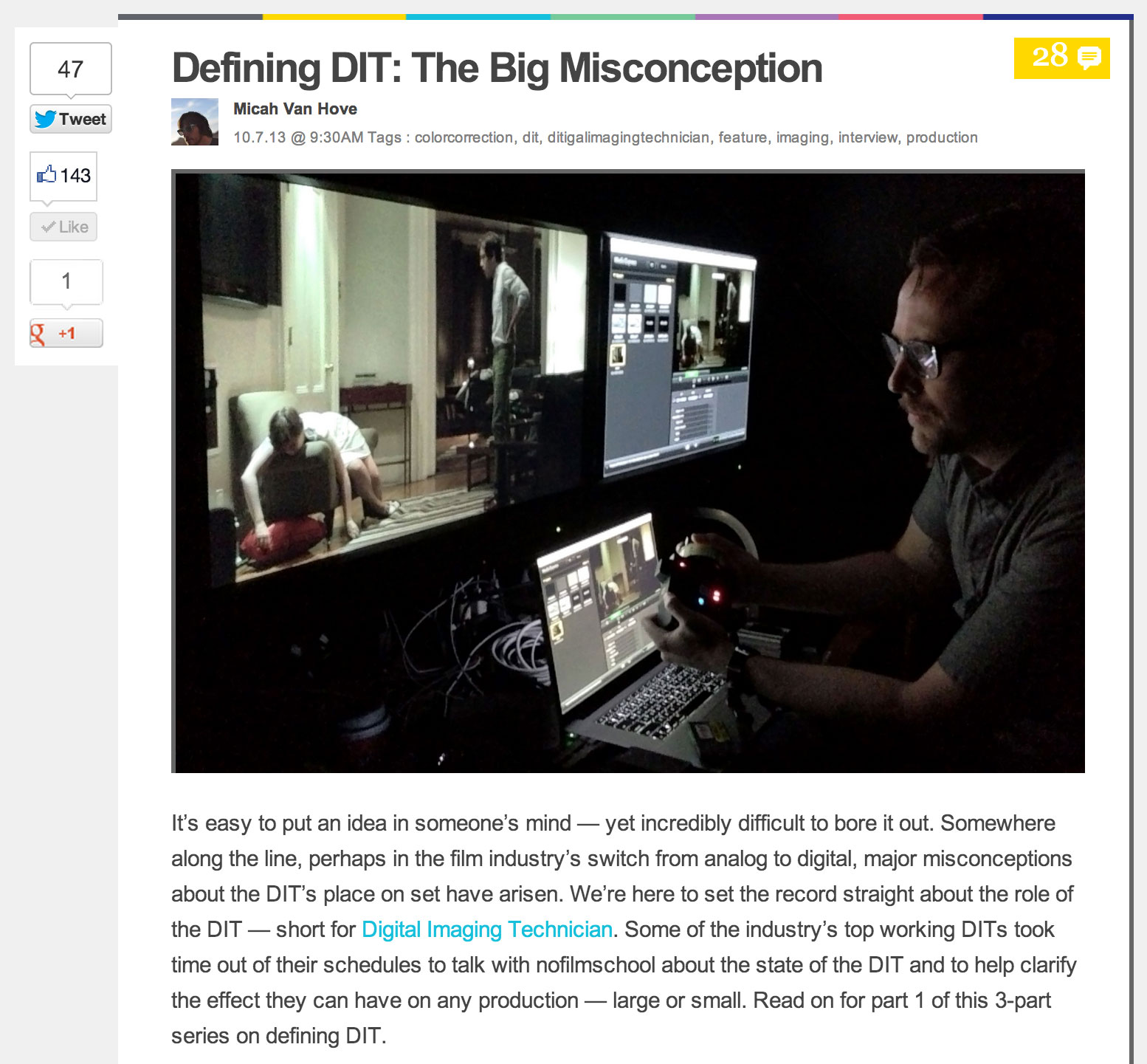

Identifying problematic light sources and gelling them correctly can be a very time- and labor-intensive process, and one that doesn't happen on set as often as it should, so it is usually left to the digital toolset. There is now a whole host of affordable software that can be used on set at the time of photography, such as LiveGrade or LinkColor, or later in post production, such as Resolve, Scratch, Express Dailies, and countless others.

When we're talking about on-set color correction, we're usually talking about the ASC CDL, or Color Decision List. CDL is a very useful way to pre-grade, or begin color correction at the time of photography. This non-destructive color correction data is very trackable through post production and can be linked to its corresponding camera media through metadata in an Avid ALE (Avid Log Exchange) file. When implemented successfully, the pre-grade can be recalled at the time of finishing and used as a starting point for final color. In practice, this saves an enormous amount of time, energy, and consequently... $$$.
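Part of what makes the CDL so trackable is that it's just ten numbers per shot: a slope, offset, and power for each of red, green, and blue, plus a single saturation value. As a minimal sketch of how those numbers act on a pixel, the Python below applies them to one normalized RGB value. It is a simplified reading of the published transfer function (clamping to the 0 to 1 range, Rec. 709 luma weights for saturation), and `apply_cdl` is a hypothetical name, not part of any grading tool's API.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Rec. 709 luma weights used by the ASC CDL saturation operation.
LUMA_709 = (0.2126, 0.7152, 0.0722)


def _clamp(x: float) -> float:
    """Clamp a value to the normalized 0..1 range."""
    return min(1.0, max(0.0, x))


def apply_cdl(rgb: Sequence[float], slope: Sequence[float],
              offset: Sequence[float], power: Sequence[float],
              sat: float) -> RGB:
    """Apply CDL Slope/Offset/Power, then Saturation, to one normalized RGB pixel."""
    # Per channel: out = clamp(in * slope + offset) ** power
    sop = [
        _clamp(c * s + o) ** p
        for c, s, o, p in zip(rgb, slope, offset, power)
    ]
    # Saturation: push each channel toward (sat < 1) or away from (sat > 1) luma.
    luma = sum(w * c for w, c in zip(LUMA_709, sop))
    return tuple(_clamp(luma + sat * (c - luma)) for c in sop)


if __name__ == "__main__":
    # A warming pre-grade on 18% grey: red up, blue down, green untouched.
    print(apply_cdl((0.18, 0.18, 0.18), (1.1, 1.0, 0.9),
                    (0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 1.0))
```

Because the whole correction reduces to these few values, the same numbers can be carried to finishing and reapplied to the original media rather than baked into it.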

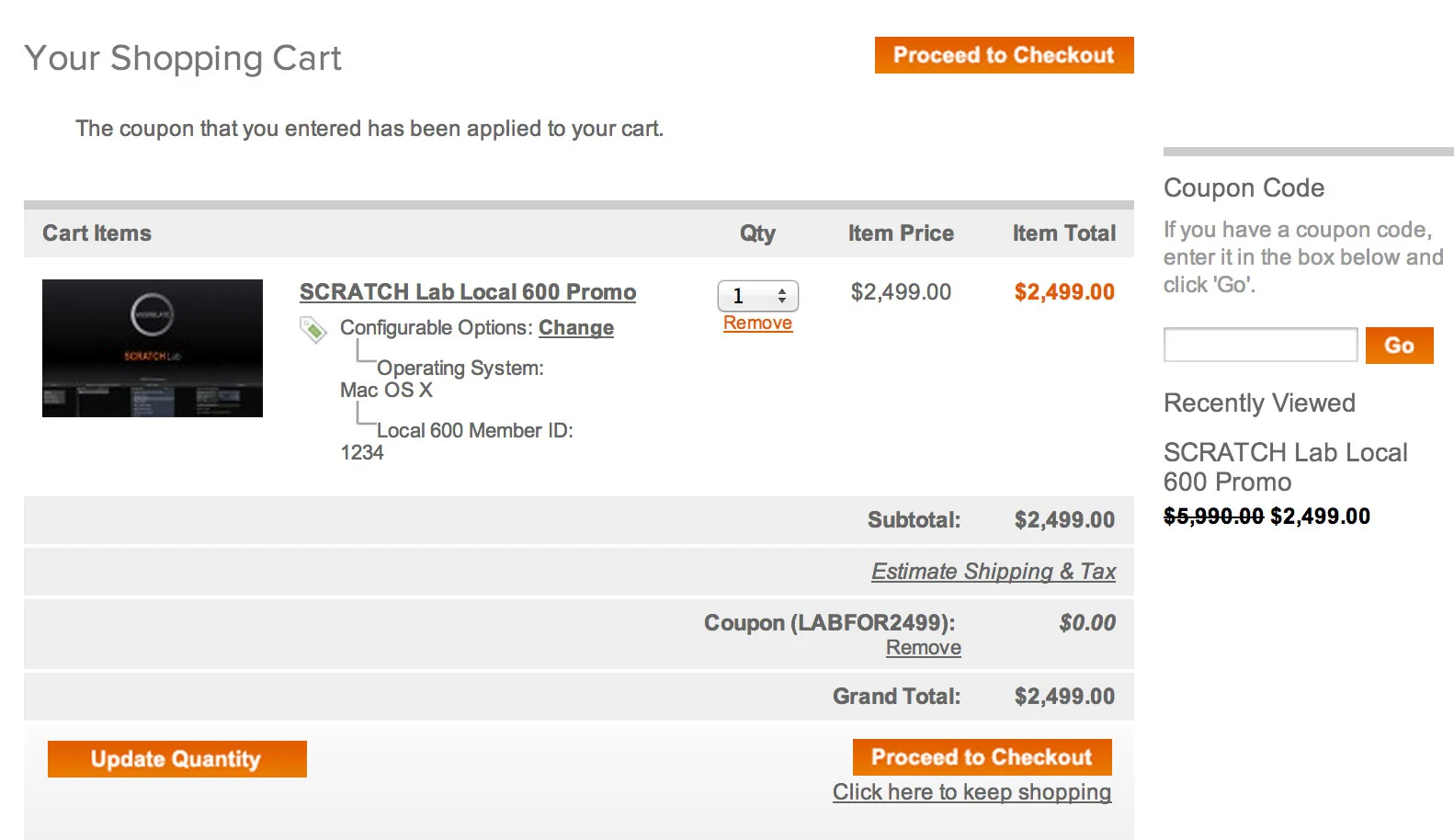
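On the ALE side, the grade typically travels as extra columns next to each clip's name and timecode. The sketch below writes a minimal tab-delimited ALE with Heading, Column, and Data sections. The `ASC_SOP` and `ASC_SAT` column names and the parenthesized SOP format follow a convention many dailies tools use, but treat them as assumptions and confirm what your editorial or finishing system expects. `ClipGrade`, `build_ale`, and the clip, reel, and timecode values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Triple = Tuple[float, float, float]


@dataclass
class ClipGrade:
    name: str      # clip name as it appears on the camera media (hypothetical)
    tape: str      # reel / tape name (hypothetical)
    start: str     # start timecode
    end: str       # end timecode
    slope: Triple
    offset: Triple
    power: Triple
    sat: float


def _triple(values: Triple) -> str:
    """Format three values as '(r g b)' with four decimal places."""
    return "(" + " ".join(f"{v:.4f}" for v in values) + ")"


def build_ale(clips: List[ClipGrade], fps: str = "23.976") -> str:
    """Build a minimal tab-delimited ALE carrying one CDL per clip."""
    columns = ["Name", "Tape", "Start", "End", "ASC_SOP", "ASC_SAT"]
    lines = [
        "Heading",
        "FIELD_DELIM\tTABS",
        "VIDEO_FORMAT\t1080",
        f"FPS\t{fps}",
        "",
        "Column",
        "\t".join(columns),
        "",
        "Data",
    ]
    for c in clips:
        # Slope, offset, and power triples concatenated into one field.
        sop = _triple(c.slope) + _triple(c.offset) + _triple(c.power)
        lines.append("\t".join([c.name, c.tape, c.start, c.end, sop, f"{c.sat:.4f}"]))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    clip = ClipGrade("A001C003", "A001", "10:00:00:00", "10:00:12:00",
                     (1.1, 1.0, 0.9), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 1.0)
    print(build_ale([clip]))
```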

Here's one way an ALE with the correct CDL information can be generated in Assimilate Scratch Lab:

In the top level of Scratch, here's our old friend the Chip Chart. Hooray!

© 2021 Bennett Cain / All Rights Reserved /